To clarify, we are not anti-AI. We know there are brilliant use cases for the broad collection of technologies that fall into that category. We are, however, against the headlong rush into using generative AI because others are and because marketers and big tech tell us we must.

Each day I open my news feeds to see more and more about the downsides of the haphazard rush to integrate "AI" into everything. AI is in quotes there because this almost always means generative AI or Large Language Models (LLMs). We've been integrating components of AI such as Machine Learning (ML), Computer Vision (CV), and Natural Language Processing (NLP) for many years now with minimal fanfare. However, this time around it seems that the marketers have latched onto something they can sell for big bucks. Tech-unsavvy middle managers and executives have caught the bug and defined themselves around the tool, as well. This is why you hear so much about "AI" these days.

Unfortunately, it's just not panning out for most, and it's going to be a hard fall for those who have defined themselves by the technology. Those of us who have been in the tech industry for more than a few years know this is cyclical. Something new and cool comes onto the scene, wows those without much domain knowledge, and quietly fades away into a minimal existence. It never goes away completely because it does have good use cases, but it isn't the silver bullet for everything that some people believe it to be.

We saw the same with blockchain a few years ago. The "distributed ledger" technology was going to save us from all our ills. Everything was going onto the blockchain so it was immutable and couldn't be disputed. Everyone was hyping cryptocurrency. Fast forward to 2025 and fiat currency hasn't fallen. "The blockchain" hasn't replaced traditional infrastructure in any way. And blockchain hasn't even taken hold in its one perfect use case: supply chain tracking. It's just fizzled away into almost nothingness.

At least with blockchain, there weren't glaring vulnerabilities in the mix. Unfortunately, many seem to be ignoring some pretty dangerous vulnerabilities in AI and the way we use it.

"Vibe coding" is the latest hotness in the LinkedIn scene. It's also the latest meme among skilled developers. The jokes basically go something like this:

- Person who knows nothing about software development thinks they can get rich vibe coding the next killer app.

- Person asks GenAI platform for all the code and cobbles it together without reviewing or even understanding how it works.

- App is breached within minutes of publication and attackers take over person's cloud account, racking up big bucks in hosting fees.

- Person gets an exorbitant bill from said cloud platform.

- Person is still clueless about what went wrong.

There are even dangers with experienced software developers relying too heavily on GenAI to create code. Since GenAI models train mostly on the open Internet, there are plenty of ways to inject malicious code into prompt responses. There has been a noticeable uptick in poisoned module repositories, as well. If an experienced software developer blindly uses code from the LLM or even blindly trusts a module or other component just because it comes from a trusted library, that could mean more and more breaches down the road.
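One small habit that pushes back against blind trust is to pin the hash of a component you have actually reviewed and refuse anything that doesn't match it. Below is a minimal Python sketch of that idea, not a complete supply chain defense. The function name `matches_pinned_digest` and the sample bytes are made up for illustration; in practice the pinned digest would be recorded when the component was reviewed.

```python
import hashlib
import hmac


def matches_pinned_digest(data: bytes, pinned_sha256: str) -> bool:
    """Return True only if data hashes to the pinned SHA-256 hex digest."""
    actual = hashlib.sha256(data).hexdigest()
    # compare_digest does a constant-time comparison of the two hex strings.
    return hmac.compare_digest(actual, pinned_sha256.lower())


# Hypothetical stand-in for a downloaded module that someone has reviewed.
reviewed = b"example package contents"
# Digest recorded at review time (computed here only to keep the sketch runnable).
pinned = hashlib.sha256(reviewed).hexdigest()

# A later download that was tampered with no longer matches the pin.
tampered = reviewed + b" plus something nasty"

if not matches_pinned_digest(tampered, pinned):
    print("Digest mismatch: do not install this component.")
```

Package managers can do this for you. pip, for example, has a hash-checking mode (`--require-hashes`). The check only proves the bytes haven't changed since someone looked at them, so a human still has to do the looking.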

Consider this: Would you trust a surgeon if they walked in with a laptop and asked an LLM for each step of the procedure they were going to perform on you?

Before you say "well, software isn't a life-or-death situation", let me correct you. There have been deaths attributed to cyber attacks on the healthcare industry. Hospitals have closed or had services degraded by ransomware attacks, leaving emergency cases with nowhere to be redirected in time. Labs have not been completed in time to give a patient the correct lifesaving care. These aren't just possibilities any longer. They have happened. People have died.

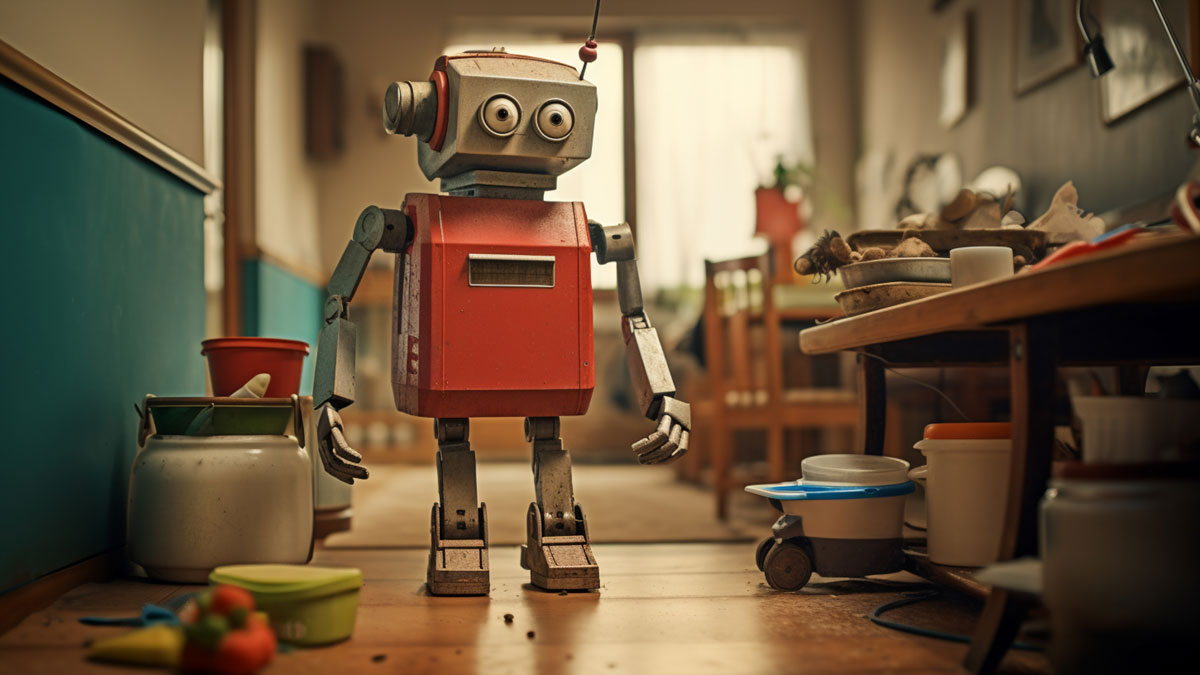

On the good side, it seems that there is a growing snowball of companies that are retreating from the drunken rush to integrate GenAI/LLMs into everything they do. Companies that replaced customer service agents with chatbots are starting to turn back. This feels very similar to the off-shoring reversal many went through within the last few decades. People want to talk to folks like them. Not agents halfway around the world. Not robot agents.

Unfortunately, you still have some very notable crazies out there who have defined themselves by AI. They are going to need an intervention and probably some deprogramming. Eventually, though, we'll get to the point where truly useful AI tools are properly integrated into our daily processes and you won't have to hear all the hype any longer. Until then, be mindful of what's being pushed and know that you aren't wrong to be skeptical.

References:

https://www.darkreading.com/application-security/next-gen-developers-cybersecurity-powder-keg

https://www.darkreading.com/vulnerabilities-threats/generative-ai-exacerbates-software-supply-chain-risks

https://www.securityweek.com/new-echo-chamber-jailbreak-bypasses-ai-guardrails-with-ease/

https://www.theregister.com/2025/06/13/cloud_costs_ai_inferencing/

https://www.theregister.com/2025/06/11/gartner_ai_customer_service/